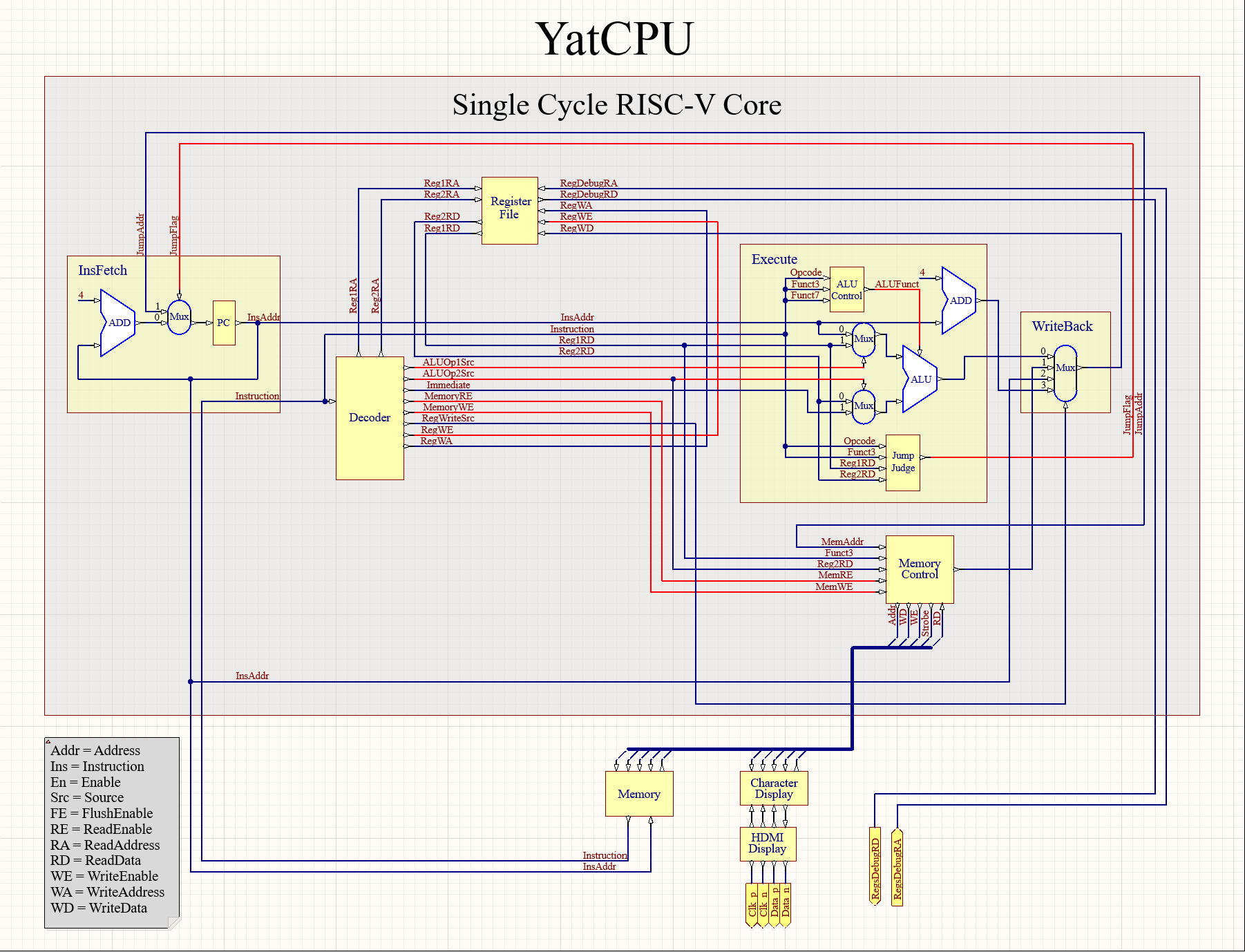

Polynomial Curve Fitting

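\[
y(x,\vec w) = w_0 + w_1x + w_2x^2+\cdots+w_Mx^M = \displaystyle\sum_{j=0}^{M}w_jx^j
\]
where \(M\) is the order of the polynomial. Although \(y(x,\vec w)\) is a nonlinear function of \(x\), it is linear in the coefficients \(\vec w\). The coefficients are found by minimizing an error function that measures the misfit between the function \(y(x,\vec w)\), for any given value of \(\vec w\), and the training set data points.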
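A minimal Python sketch of evaluating the polynomial model, assuming the coefficients are stored in a plain list `w` with `w[j]` holding \(w_j\); the helper name `poly_eval` is hypothetical. It uses Horner's rule, which gives the same value as the explicit sum \(\sum_j w_j x^j\) with only \(M\) multiplications.

```python
def poly_eval(x, w):
    """Evaluate y(x, w) = sum_{j=0}^{M} w[j] * x**j, with M = len(w) - 1.

    Horner's rule: y = w_0 + x*(w_1 + x*(w_2 + ... + x*w_M)).
    """
    y = 0.0
    for wj in reversed(w):  # start from the highest-order coefficient w_M
        y = y * x + wj
    return y


# w = [1, 2, 3] encodes y(x) = 1 + 2x + 3x^2, so y(2) = 1 + 4 + 12.
print(poly_eval(2.0, [1.0, 2.0, 3.0]))  # 17.0
```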

One simple and widely used choice of error function is the sum of the squares of the errors between the predictions \(y(x_n, \vec w)\) for each data point \(x_n\) and the corresponding target values \(t_n\):
\[
E(\vec w) = \dfrac{1}{2}\displaystyle\sum_{n=1}^{N}[y(x_n,\vec w)-t_n]^2
\]
We then find the \(\vec w^\star\) for which \(E(\vec w)\) is as small as possible. Because \(E(\vec w)\) is a quadratic function of \(\vec w\), its derivatives with respect to the coefficients are linear in \(\vec w\), so \(\vec w^\star\) can be found in closed form.
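Setting \(\partial E/\partial w_i = 0\) gives the linear system \(\sum_{j=0}^{M} A_{ij} w_j = T_i\) with \(A_{ij} = \sum_n x_n^{i+j}\) and \(T_i = \sum_n x_n^i t_n\). Below is a minimal pure-Python sketch that builds and solves this system by Gaussian elimination; the function names and the toy data are hypothetical. For larger \(M\) these normal equations become badly conditioned, and a QR-based solver such as `numpy.linalg.lstsq` would likely be a safer choice.

```python
def poly_eval(x, w):
    """y(x, w) = sum_j w[j] * x**j via Horner's rule."""
    y = 0.0
    for wj in reversed(w):
        y = y * x + wj
    return y


def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        # Swap in the row with the largest pivot to limit round-off error.
        p = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[p] = aug[p], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    w = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(aug[i][j] * w[j] for j in range(i + 1, n))
        w[i] = (aug[i][n] - s) / aug[i][i]
    return w


def fit_polynomial(xs, ts, M):
    """Return w* minimizing E(w) by solving sum_j A_ij w_j = T_i."""
    A = [[sum(x ** (i + j) for x in xs) for j in range(M + 1)] for i in range(M + 1)]
    T = [sum(x ** i * t for x, t in zip(xs, ts)) for i in range(M + 1)]
    return solve(A, T)


def sum_of_squares_error(w, xs, ts):
    """E(w) = 1/2 * sum_n (y(x_n, w) - t_n)^2."""
    return 0.5 * sum((poly_eval(x, w) - t) ** 2 for x, t in zip(xs, ts))


# Toy data generated exactly from t = 1 + 2x + 3x^2, so an M = 2 fit should give E(w*) ~ 0.
xs = [0.0, 1.0, 2.0, 3.0]
ts = [1.0, 6.0, 17.0, 34.0]
w_star = fit_polynomial(xs, ts, M=2)
print(w_star, sum_of_squares_error(w_star, xs, ts))
```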

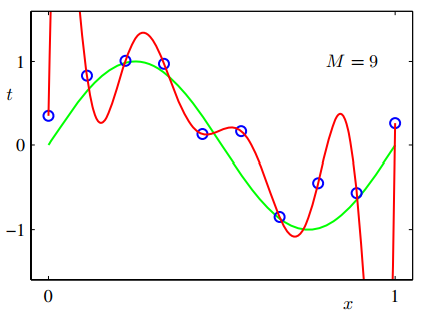

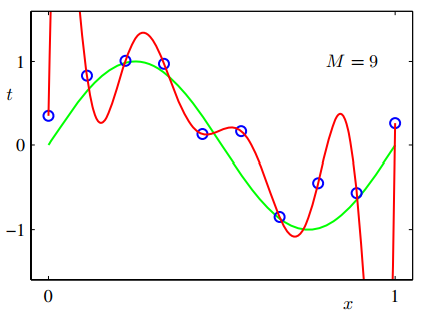

Choosing the order \(M\) of the polynomial is an instance of model comparison, or model selection.

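Over-fitting: a polynomial of high order \(M\) can fit the training points very closely (exactly, once \(M+1 = N\)) yet represent the underlying function poorly, so its error on new data is large. To compare errors on training and test sets of different sizes, use the root-mean-square (RMS) error:
\[
E_{RMS} = \sqrt{2E(\vec w^\star)/N}
\]
Dividing by \(N\) lets data sets of different sizes be compared on an equal footing, and the square root puts \(E_{RMS}\) on the same scale (and in the same units) as the target variable \(t\).

By adopting a Bayesian approach, the over-fitting problem can be avoided. Indeed, in a Bayesian model the effective number of parameters adapts automatically to the size of the data set.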
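A small sketch of the RMS error \(E_{RMS}\), assuming \(w_j\) is stored in `w[j]`; the helper names and the data are hypothetical. Because \(2E(\vec w)/N\) is simply the mean squared residual, repeating a data set doubles \(E\) but leaves \(E_{RMS}\) unchanged, which is what makes it usable for comparing training and test sets of different sizes, for example when choosing \(M\).

```python
import math


def poly_eval(x, w):
    """y(x, w) = sum_j w[j] * x**j via Horner's rule."""
    y = 0.0
    for wj in reversed(w):
        y = y * x + wj
    return y


def sum_of_squares_error(w, xs, ts):
    """E(w) = 1/2 * sum_n (y(x_n, w) - t_n)^2."""
    return 0.5 * sum((poly_eval(x, w) - t) ** 2 for x, t in zip(xs, ts))


def rms_error(w, xs, ts):
    """E_RMS = sqrt(2 E(w) / N): the root of the mean squared residual."""
    return math.sqrt(2.0 * sum_of_squares_error(w, xs, ts) / len(xs))


w = [0.0, 1.0]                           # hypothetical fitted model y(x) = x
xs, ts = [0.0, 1.0, 2.0], [1.0, 1.0, 1.0]
e_small = rms_error(w, xs, ts)
e_large = rms_error(w, xs * 2, ts * 2)   # same points repeated twice: N doubles
print(sum_of_squares_error(w, xs, ts), sum_of_squares_error(w, xs * 2, ts * 2))  # 1.0 2.0
print(e_small, e_large)  # the two RMS values agree
```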

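Regularization: add a penalty term to the error function to discourage the coefficients from reaching large values:
\[
\tilde{E}(\vec w)= \dfrac{1}{2}\displaystyle\sum_{n=1}^{N}[y(x_n,\vec w)-t_n]^2 + \dfrac{\lambda}{2}\lVert\vec w\rVert^2
\]
where \(\lVert\vec w\rVert^2 \equiv \vec w^{\mathsf T}\vec w = w_0^2 + w_1^2 + \cdots + w_M^2\), and the coefficient \(\lambda\) governs the relative importance of the regularization term compared with the sum-of-squares error term.

In statistics this quadratic regularizer is known as ridge regression.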
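Differentiating \(\tilde E(\vec w)\) adds \(\lambda w_i\) to each component of the gradient, so the regularized minimizer solves \(\sum_j (A_{ij} + \lambda\delta_{ij})w_j = T_i\), with \(A_{ij} = \sum_n x_n^{i+j}\) and \(T_i = \sum_n x_n^i t_n\). A minimal pure-Python sketch follows, with hypothetical function names and toy data; here \(\lVert\vec w\rVert^2\) is taken to include \(w_0\). Increasing \(\lambda\) shrinks the norm of the fitted coefficients.

```python
import math


def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        p = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[p] = aug[p], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    w = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(aug[i][j] * w[j] for j in range(i + 1, n))
        w[i] = (aug[i][n] - s) / aug[i][i]
    return w


def fit_ridge(xs, ts, M, lam):
    """Minimize E~(w) = 1/2 sum_n (y(x_n, w) - t_n)^2 + lam/2 * ||w||^2."""
    A = [[sum(x ** (i + j) for x in xs) + (lam if i == j else 0.0)
          for j in range(M + 1)]
         for i in range(M + 1)]
    T = [sum(x ** i * t for x, t in zip(xs, ts)) for i in range(M + 1)]
    return solve(A, T)


def norm(w):
    """Euclidean norm ||w|| (includes w_0)."""
    return math.sqrt(sum(wj * wj for wj in w))


xs = [0.0, 1.0, 2.0, 3.0]
ts = [1.0, 6.0, 17.0, 34.0]  # exactly t = 1 + 2x + 3x^2
for lam in (0.0, 1.0, 10.0):
    w = fit_ridge(xs, ts, M=2, lam=lam)
    print(lam, [round(wj, 4) for wj in w], round(norm(w), 4))
```

With \(\lambda = 0\) this reduces to the unregularized least-squares fit.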

Bayesian Probabilities

\[
p(\mathbf {w}|\mathcal{D})=\dfrac{p(\mathcal{D}|\mathbf w)p(\mathbf w)}{p(\mathcal D)}
\]

where the normalization constant (the evidence) is
\[
p(\mathcal D) = \int p(\mathcal{D}|\mathbf w)p(\mathbf w)\,\mathrm d\mathbf w
\]
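To see how the pieces of Bayes' theorem fit together, the sketch below approximates the integral for \(p(\mathcal D)\) by a Riemann sum over a fine grid of values for a single scalar parameter \(w\). Everything about the model is an assumption chosen for illustration: a hypothetical one-parameter model \(t = wx\) plus Gaussian noise, a Gaussian prior on \(w\), and made-up data.

```python
import math


def gaussian_pdf(z, mean, std):
    """Density of N(mean, std^2) at z."""
    return math.exp(-0.5 * ((z - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


# Hypothetical data set D and assumed model: t = w * x + Gaussian noise.
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ts = [0.1, 0.9, 2.1, 2.9, 4.2]
noise_std = 0.5  # assumed noise standard deviation
prior_std = 2.0  # assumed prior p(w) = N(w | 0, prior_std^2)


def likelihood(w):
    """p(D | w) = prod_n N(t_n | w * x_n, noise_std^2)."""
    p = 1.0
    for x, t in zip(xs, ts):
        p *= gaussian_pdf(t, w * x, noise_std)
    return p


def prior(w):
    """p(w) = N(w | 0, prior_std^2)."""
    return gaussian_pdf(w, 0.0, prior_std)


dw = 0.001
grid = [-5.0 + i * dw for i in range(10001)]             # w in [-5, 5]
unnormalized = [likelihood(w) * prior(w) for w in grid]  # p(D|w) p(w)
evidence = sum(unnormalized) * dw                        # p(D) ~ integral of p(D|w) p(w) dw
posterior = [u / evidence for u in unnormalized]         # p(w|D) by Bayes' theorem

w_map = grid[max(range(len(grid)), key=lambda i: posterior[i])]
print("p(D) ~", evidence)
print("posterior mode ~", w_map)
print("posterior mass ~", sum(posterior) * dw)  # 1 up to round-off, by construction
```

For this conjugate Gaussian choice the grid mode should land next to the closed-form posterior mode \(\beta\sum_n x_n t_n / (\alpha + \beta\sum_n x_n^2)\), with \(\beta = 1/\sigma_{\text{noise}}^2\) and \(\alpha = 1/\sigma_{\text{prior}}^2\).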

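\[
\text{posterior}\propto \text{likelihood}\times \text{prior}
\]
Maximum likelihood: choose \(\mathbf w\) to maximize the likelihood function \(p(\mathcal D|\mathbf w)\). In practice one minimizes the negative log of the likelihood, which serves as an error function; because the negative logarithm is monotonically decreasing, minimizing it is equivalent to maximizing the likelihood.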
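The equivalence can be checked numerically under an assumption these notes have not made yet: that each target is the polynomial prediction plus independent Gaussian noise with precision (inverse variance) \(\beta\). Under that assumption, \(-\ln p(\mathcal D|\mathbf w) = \beta E(\mathbf w) - \tfrac N2\ln\beta + \tfrac N2\ln(2\pi)\), where \(E\) is the sum-of-squares error, so for fixed \(\beta\) maximizing the likelihood is the same as minimizing \(E(\mathbf w)\). The data, \(\beta\), and candidate coefficient vectors below are hypothetical.

```python
import math


def poly_eval(x, w):
    """y(x, w) = sum_j w[j] * x**j via Horner's rule."""
    y = 0.0
    for wj in reversed(w):
        y = y * x + wj
    return y


def sum_of_squares_error(w, xs, ts):
    """E(w) = 1/2 * sum_n (y(x_n, w) - t_n)^2."""
    return 0.5 * sum((poly_eval(x, w) - t) ** 2 for x, t in zip(xs, ts))


def gaussian_pdf(z, mean, var):
    """Density of N(mean, var) at z."""
    return math.exp(-0.5 * (z - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def neg_log_likelihood(w, xs, ts, beta):
    """-ln p(D | w), computed directly from the assumed Gaussian noise densities."""
    return -sum(math.log(gaussian_pdf(t, poly_eval(x, w), 1.0 / beta))
                for x, t in zip(xs, ts))


xs = [0.0, 1.0, 2.0, 3.0]
ts = [0.9, 3.2, 4.8, 7.1]  # hypothetical noisy targets
beta = 4.0                 # assumed noise precision
N = len(xs)
candidates = [[0.0, 2.0], [1.0, 2.0], [1.0, 1.5], [0.5, 2.5]]  # hypothetical w vectors

for w in candidates:
    nll = neg_log_likelihood(w, xs, ts, beta)
    closed_form = (beta * sum_of_squares_error(w, xs, ts)
                   - 0.5 * N * math.log(beta) + 0.5 * N * math.log(2.0 * math.pi))
    print(w, round(nll, 6), round(closed_form, 6))

# Maximum likelihood and least squares pick the same candidate.
best_ml = max(candidates, key=lambda w: math.exp(-neg_log_likelihood(w, xs, ts, beta)))
best_ls = min(candidates, key=lambda w: sum_of_squares_error(w, xs, ts))
print(best_ml == best_ls)
```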